With GKE and NAT services, Google enables organizations to manage network configurations more effectively. However, one of the key challenges for network admins using Google's VPC was managing the load on network endpoints, so the search giant introduced container-native load balancing. Deploying network configurations through programmable APIs helps organizations realize the benefits of infrastructure as code (IaC). However, containerization is a better approach to deploying networks as code.
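As a rough illustration of treating network configuration as code, the sketch below builds a Kubernetes Service manifest that requests container-native load balancing on GKE. It assumes a VPC-native GKE cluster and uses the `cloud.google.com/neg` annotation, which asks GKE to create network endpoint groups (NEGs) so an Ingress load balancer can send traffic directly to Pod IPs. The service name, label, and ports are hypothetical, and on recent GKE versions NEGs may already be the default for Ingress, so check the GKE documentation for your cluster version.

```python
import json


def neg_service_manifest(name: str, app_label: str, port: int, target_port: int) -> dict:
    """Build a Service that asks GKE to create NEGs for container-native load balancing."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            # GKE reads this annotation and creates NEGs that point at Pod IPs,
            # so an Ingress load balancer can skip the node-level hop.
            "annotations": {"cloud.google.com/neg": json.dumps({"ingress": True})},
        },
        "spec": {
            "type": "ClusterIP",
            "selector": {"app": app_label},
            "ports": [{"port": port, "targetPort": target_port, "protocol": "TCP"}],
        },
    }


if __name__ == "__main__":
    # Hypothetical service: "web" Pods listening on 8080, exposed on port 80.
    print(json.dumps(neg_service_manifest("web", "web", 80, 8080), indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`; keeping that file in source control is what brings the IaC benefits described above.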

To learn more about containerization, read the following list of frequently asked questions and answers. Hiren is CTO at Simform, with extensive experience in helping enterprises and startups streamline their business performance through data-driven innovation. Higher speed and lower CPU utilization were at the top of their list. Containerization supports Agile practices and helps the organization overcome a lack of maturity in its SDLC. You don't need to pack much into containers, and you have flexibility in when to terminate them.

Resource Efficiency And Density

It also improves collaboration between NetOps and SecOps through a single source of truth: source control becomes the one source of truth for the entire system. This lets you keep a uniform network configuration across environments.

In addition, containers are built from images, which contain software libraries and files, any of which may contain vulnerabilities. A vulnerability in one container image can spread to every container built from it, and from there across an entire environment. These are only two examples of the unique security threats facing containers. Containerized environments are inherently dynamic and have a large number of moving parts. This dynamism comes from the multitude of services, interdependencies, and configurations involved, and from the transient nature of containers themselves.
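To make the shared-image risk concrete, here is a minimal sketch that walks each image's `FROM` chain and flags every image that inherits a vulnerable package. The image names, package versions, and advisory list are all hypothetical; a real scanner reads package databases from the image layers and matches them against published advisories.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class Image:
    name: str
    base: Optional[str] = None                              # image this one is built FROM, if any
    packages: Dict[str, str] = field(default_factory=dict)  # package -> version added by this image


def resolved_packages(name: str, images: Dict[str, Image]) -> Dict[str, str]:
    """Collect packages from the whole FROM chain; child images override their base."""
    chain, seen = [], set()
    current = images.get(name)
    while current is not None and current.name not in seen:  # guard against cycles
        seen.add(current.name)
        chain.append(current)
        current = images.get(current.base) if current.base else None
    packages: Dict[str, str] = {}
    for image in reversed(chain):  # apply the base image first
        packages.update(image.packages)
    return packages


def affected_images(images: Dict[str, Image], advisories: Set[Tuple[str, str]]) -> List[str]:
    """Return the sorted names of images that contain any vulnerable (package, version)."""
    return sorted(
        name for name in images
        if any((pkg, ver) in advisories for pkg, ver in resolved_packages(name, images).items())
    )


if __name__ == "__main__":
    # Hypothetical images: two services share one base image with an old library.
    images = {
        "base-os": Image("base-os", packages={"openssl": "1.0.0"}),
        "api": Image("api", base="base-os", packages={"flask": "3.0.0"}),
        "worker": Image("worker", base="base-os", packages={"celery": "5.3.0"}),
        "tools": Image("tools", packages={"curl": "8.5.0"}),
    }
    advisories = {("openssl", "1.0.0")}  # hypothetical advisory entry
    print(affected_images(images, advisories))  # ['api', 'base-os', 'worker']
```

Because `api` and `worker` inherit from `base-os`, one vulnerable base image surfaces in three places, which is how a single image can put a whole environment at risk.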

Top Benefits Of Containerization

Containers can share common binaries (also called "bins") and libraries with one another. This sharing eliminates the overhead of running a full OS inside every app. It is also worth understanding the environmental footprint of such IT infrastructure decisions. With no substantial overhead to wait for, the only startup delay comes from your own code.
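One way to see why sharing pays off: container images are built from content-addressed layers, and a host stores each distinct layer once, no matter how many images reference it. The sketch below compares the storage needed with and without that sharing. The digests and sizes are made-up placeholders, and the model ignores compression and each running container's own writable layer.

```python
from typing import Dict, List, Tuple

Layer = Tuple[str, int]  # (content digest, size in MB)


def storage_mb(images: Dict[str, List[Layer]]) -> Tuple[int, int]:
    """Return (MB if every image kept private copies, MB when layers are shared by digest)."""
    without_sharing = sum(size for layers in images.values() for _, size in layers)
    unique: Dict[str, int] = {}
    for layers in images.values():
        for digest, size in layers:
            unique[digest] = size  # identical digest means identical content, stored once
    return without_sharing, sum(unique.values())


if __name__ == "__main__":
    # Hypothetical images: a shared OS base and shared libraries, plus one app layer each.
    base, libs = ("sha256:base", 80), ("sha256:libs", 40)
    images = {
        "orders": [base, libs, ("sha256:orders", 5)],
        "billing": [base, libs, ("sha256:billing", 10)],
        "search": [base, libs, ("sha256:search", 15)],
    }
    print(storage_mb(images))  # (390, 150)
```

Only the small per-app layers grow with each new image; the shared base and library layers are paid for once.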

Containers in a cluster draw on computing resources from the same shared operating system, but one container does not interfere with the operation of other containers. Unlike traditional virtual machines, which each require a complete operating system, containers share the host system's kernel, which makes them lightweight and fast. Containerization delivers several advantages to software developers and IT operations teams. Specifically, containers let developers build and deploy applications more rapidly and securely than traditional development approaches allow. Containers are lightweight, portable units that package an application and its dependencies to ensure it runs consistently across different environments.

- However, as you scale it across infrastructure, a lack of governance can lead to failures.

- Nutanix provides platform mobility, giving you the choice to run workloads on both your Nutanix private cloud and the public cloud.

- Containerization eliminated this burden and took over the task of handling deployment formalities.

Explore how Kubernetes enables companies to manage large-scale applications, improve resource efficiency, and achieve faster software delivery cycles. Learn how adopting Kubernetes can optimize your IT infrastructure and increase operational efficiency. This means you can have the same environment from development to production, which eliminates the inconsistencies of manual software deployment. The popularity of specific tools may shift and change, but Octopus Deploy is container- and cloud-agnostic. It works with a range of container registries, PaaS providers, Docker, and Kubernetes to help make your complex deployments simpler.

This technology lets workloads be easily reconfigured when scaling changes are needed. A container can be deployed to a private datacenter, a public cloud, or even a personal laptop, regardless of technology platform or vendor. Containers are also isolated from the host operating system's other processes and have only limited, controlled access to its computing resources.

Container orchestration platforms like Kubernetes automate the setup, management, and scaling of containerized applications and services. This allows containers to adjust autonomously to their workload. Automating tasks such as rolling out new versions, logging, debugging, and monitoring makes container management straightforward. To help you begin this journey, or to discuss your progress so far, IBM Cloud Advisory Services is introducing the IBM Services for Private Cloud (ISPC) Adoption Workshop. This two-day workshop aims to help you develop a roadmap to migrate and modernize your applications and adopt a container-based private cloud.
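How an orchestrator scales containers with their workload can be illustrated with the rule documented for the Kubernetes Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric), with no change when the ratio is within a tolerance (0.1 by default). The sketch below implements that formula with min/max clamping; it leaves out the stabilization windows, Pod readiness handling, and multi-metric logic that the real controller applies.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style scaling decision for a single metric."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: do nothing
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured bounds, like minReplicas/maxReplicas on an HPA object.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 replicas averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(3, 90, 60))  # 5
```

Rounding up rather than to the nearest integer biases the decision toward having enough capacity when load rises.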

One answer to this problem is to use container-specific networking solutions. These provide a virtual network that containers can use to communicate with each other. They also let you control the network traffic between containers, which improves security.
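In Kubernetes, a common way to control traffic between containers is a NetworkPolicy, which is enforced only if the cluster's network plugin supports it. The sketch below builds a policy that lets only `app: frontend` Pods reach `app: backend` Pods on TCP port 8080, plus a deliberately simplified evaluator for a single policy. The labels and port are hypothetical, and the evaluator ignores namespaces, `namespaceSelector`, `ipBlock`, named ports, and the interaction of multiple policies.

```python
from typing import Dict


def allow_from_policy(target_app: str, source_app: str, port: int) -> dict:
    """NetworkPolicy admitting ingress to target_app Pods only from source_app Pods on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}"},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


def _matches(selector: dict, labels: Dict[str, str]) -> bool:
    # An empty matchLabels selects every Pod.
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def ingress_allowed(policy: dict, dest: Dict[str, str], src: Dict[str, str], port: int) -> bool:
    """Simplified: evaluates one policy, podSelector peers and numeric ports only."""
    spec = policy["spec"]
    if not _matches(spec["podSelector"], dest):
        return True  # the policy does not select this Pod, so it does not restrict it
    for rule in spec.get("ingress", []):
        # An omitted "from" or "ports" list means "any source" or "any port".
        peer_ok = "from" not in rule or any(
            _matches(peer.get("podSelector", {}), src) for peer in rule["from"])
        port_ok = "ports" not in rule or any(p.get("port") == port for p in rule["ports"])
        if peer_ok and port_ok:
            return True
    return False  # selected Pod with no matching rule: traffic is denied


if __name__ == "__main__":
    policy = allow_from_policy("backend", "frontend", 8080)
    print(ingress_allowed(policy, {"app": "backend"}, {"app": "frontend"}, 8080))  # True
    print(ingress_allowed(policy, {"app": "backend"}, {"app": "batch"}, 8080))     # False
```

Once a Pod is selected by any ingress policy, traffic that no rule allows is dropped, which is what turns the virtual network into a security boundary.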

Persistent storage volumes let you store data outside the container, ensuring it persists even if the container is deleted. Containerization is a lighter-weight alternative to full-machine virtualization, encapsulating an app in a container with its own environment. Containerization can be a useful tool for improving the software development lifecycle.
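In Kubernetes, storage that outlives a container is commonly requested with a PersistentVolumeClaim and mounted into a Pod. The sketch below generates both objects as a `v1` `List` that `kubectl apply -f` can consume. The claim name, image, size, and mount path are hypothetical, and the cluster is assumed to have a default StorageClass that can provision the volume.

```python
import json


def pvc_manifest(name: str, size: str = "1Gi") -> dict:
    """Claim for a single-node read/write volume of the given size."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


def pod_with_claim(pod_name: str, image: str, claim_name: str, mount_path: str) -> dict:
    """Pod whose container mounts the claimed volume at mount_path."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": image,
                # The mount name must match an entry in spec.volumes below.
                "volumeMounts": [{"name": "data", "mountPath": mount_path}],
            }],
            "volumes": [{"name": "data", "persistentVolumeClaim": {"claimName": claim_name}}],
        },
    }


if __name__ == "__main__":
    items = [
        pvc_manifest("app-data"),
        pod_with_claim("app", "registry.example.com/app:1.0", "app-data", "/var/lib/app"),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Deleting and recreating the Pod leaves `app-data` and its contents in place; the data is lost only when the claim itself is deleted and the volume's reclaim policy is `Delete`.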

Cloud containerization reduces operational complexity, allowing teams to focus on innovation rather than infrastructure management. In cloud computing, containerization plays an important role in delivering scalable, portable, and efficient applications. Cloud containerization lets developers build and deploy applications in containers that can run on any cloud platform without modification.

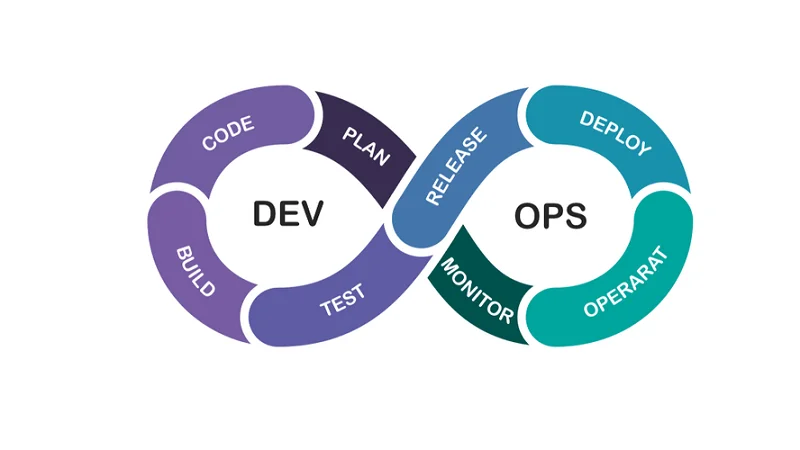

Traditional monolithic applications bundle all components of an application, such as the user interface, business logic, and database, into a single unified codebase. While this approach worked for smaller projects, it became increasingly difficult to scale, maintain, and deploy as systems grew in complexity. Containers ensure that applications work uniformly across different environments. This reduces "it works on my machine" problems, making it easier for developers to write code and for operations teams to manage applications. Ultimately, this lets DevOps teams speed up the software development lifecycle (SDLC) and iterate on software faster.

However, with containerization, you can create a single software package, or container, that runs on any host with a compatible container runtime. Docker simplifies software deployment with lightweight, portable containers, ensuring consistency, scalability, and efficiency across environments. Kubernetes, in turn, can run on almost any infrastructure, from bare-metal servers to virtual machines to cloud platforms. It supports a variety of container runtimes through its Container Runtime Interface (CRI), including containerd and CRI-O; Docker Engine can still be used through the cri-dockerd adapter, since built-in dockershim support was removed in Kubernetes 1.24. The runtime is responsible for everything from pulling and unpacking container images to running containers and handling their output. It also sets up network interfaces for containers and ensures they have access to necessary resources such as file systems and devices.
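Before a runtime can pull an image, it has to resolve a short reference such as `nginx` into a registry, repository, and tag or digest. The sketch below mimics the Docker-style defaults (`docker.io`, the `library/` prefix for single-name images, and the `latest` tag). It is a simplified illustration, not the parsing code of Docker, containerd, or CRI-O, and it does not validate the reference grammar.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into registry, repository, tag, and digest (simplified)."""
    name, digest = ref, None
    if "@" in name:
        name, digest = name.split("@", 1)
    # The first path component is a registry host only if it looks like one.
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repo = first, rest
    else:
        registry, repo = "docker.io", name
    # A tag is a ":" that appears after the last "/".
    tag = None
    colon = repo.rfind(":")
    if colon > repo.rfind("/"):
        repo, tag = repo[:colon], repo[colon + 1:]
    if registry == "docker.io" and "/" not in repo:
        repo = "library/" + repo  # official images live under library/
    if tag is None and digest is None:
        tag = "latest"  # default tag when neither tag nor digest is given
    return ImageRef(registry, repo, tag, digest)


if __name__ == "__main__":
    for ref in ["nginx", "redis:7", "ghcr.io/org/app:1.2", "localhost:5000/app"]:
        print(ref, "->", parse_image_ref(ref))
```

Checking for `.`, `:`, or `localhost` in the first component is what distinguishes `localhost:5000/app` (a private registry) from `org/app` (a Docker Hub repository).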

In the earlier setup, change management processes applied whenever companies integrated additional resources or new services. However, rapid deployments and rollbacks are difficult in a traditional setup because of tightly coupled services. There are also multiple configurations, libraries, and dependencies to manage during rollouts and rollbacks. CHG used containerization of workloads to reduce deployment time across platforms from three weeks to a day.
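Kubernetes Deployments address the rollout problem with rolling updates bounded by `maxSurge` and `maxUnavailable`. The sketch below computes those bounds the way the Deployment controller resolves them, as we understand it: percentages are taken of the replica count, `maxSurge` rounds up, `maxUnavailable` rounds down, both default to 25%, and `maxUnavailable` is raised to 1 if both resolve to zero. Integer values are used as-is.

```python
from typing import Tuple, Union

IntOrPercent = Union[int, str]


def _resolve(value: IntOrPercent, replicas: int, round_up: bool) -> int:
    """Turn 3 or "25%" into an absolute Pod count."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1])
        return -(-scaled // 100) if round_up else scaled // 100  # integer ceil / floor
    return int(value)


def rolling_update_bounds(replicas: int, max_surge: IntOrPercent = "25%",
                          max_unavailable: IntOrPercent = "25%") -> Tuple[int, int]:
    """Return (max Pods that may exist, min Pods that must stay available) during a rollout."""
    surge = _resolve(max_surge, replicas, round_up=True)
    unavailable = _resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        unavailable = 1  # otherwise the rollout could never replace a single Pod
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    print(rolling_update_bounds(10))  # (13, 8)
```

If a rollout misbehaves, `kubectl rollout undo deployment/<name>` reverts the Deployment to its previous revision, the kind of quick rollback that is hard to achieve with tightly coupled services.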